Connect, Control, Succeed: How Adapter empowers connected mobility

26 Jul 2023 | 4 min read

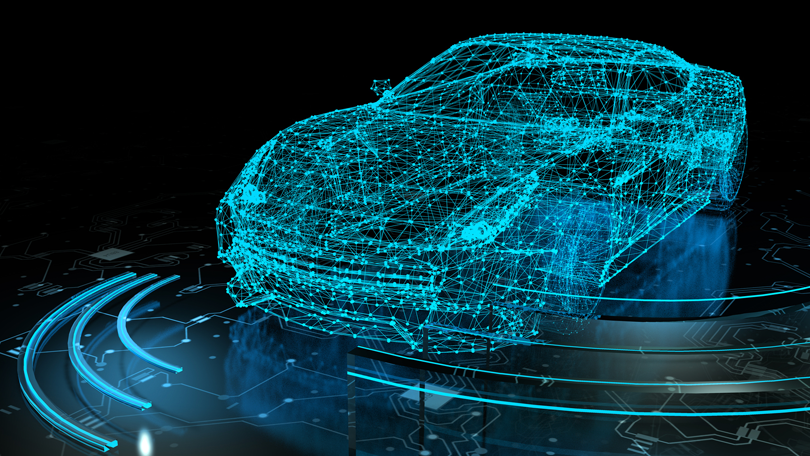

For a Car Rental or Peer-to-Peer Car-Sharing company, having complete control over the fleet is key to managing the business successfully.

To be able to control the fleet and the status of cars, Car Rentals or Peer-to-Peer businesses need to embrace a tech-based approach and adopt a digital strategy around car connectivity.

Each car speaks its own language. Standardizing the languages used by connected cars is crucial for implementing a digital strategy and for enabling essential functions such as vehicle interactions.

Having a connected car makes remote interactions such as locking and unlocking doors possible. This makes the rental process smoother, faster and more technologically advanced for both Car Rental and Peer-to-Peer car-sharing companies, and gives customers faster, contactless vehicle access!

And how does this work?

In this article we will break down how the integration of commands takes place on a technical level thanks to 2hire technology.

👀 Stay tuned for the next episode about signals 👀

Step 1: Vehicle Compatibility Check with 2hire

As you may already know, the 2hire service that enables vehicle connectivity is called Adapter. The first step in integrating Adapter’s API with your own service and ecosystem is to determine the compatibility of the vehicles in your fleet with our solution.

There are two possibilities for integrating your vehicles through our product: your vehicle can either be natively connected, as most recent models come with embedded connectivity by the manufacturer, or it can be a non-connected vehicle eligible for installation of our 2hire box.

To obtain more information about the manufacturers or models supported and to inquire about the compatibility of your vehicles, please contact our business unit.

Step 2: Develop Your Integration through 2hire Adapter Development Portal

Once you have confirmed the compatibility of your vehicles, the next step is to integrate the Adapter API. The real power of Adapter lies in its ability to expose a unified and homogeneous interface for interacting with a diverse range of vehicles from different manufacturers and models. From a Mercedes Vito to a Fiat 500, the difference is not so steep: with Adapter, you manage both in the same way.

To facilitate the development of your integration, you can utilize our Developer Portal and simulate real vehicles in our test environment. The API Reference tab contains four sections:

Prod API: This collection directly connects to our production service, allowing you to interact with your real vehicles if you already have your production credentials.

Integration Test: This set of APIs is designed to mock responses from our server. The responses are static and dependent on the input parameters, making it useful for studying our service or for early-stage development.

Test API: These endpoints enable you to develop and test your application. The service points to a special Test environment that replicates the behavior of our Production servers. You can set up mock vehicles to simulate different scenarios.

E2E Test: These endpoints allow you to create and control mock vehicles for use with the Test API.

Once you have completed the implementation and thoroughly tested it using the Test API, you are ready to switch to the Production routes.

For the scope of this post, we will focus on the development phase using our Test API and E2E tests. This integration process involves:

- Creating and registering your test vehicle,

- Integrating start and stop functionalities for testing purposes,

- Connecting to the data received from the vehicles.

Want to have a chat about it? Contact us and let’s book a product demo!

Let’s delve into each aspect:

Step 2.1: Sign up and Authentication

The first step is to sign up on our Developer Portal. This will provide you with a client ID and secret to obtain tokens for authenticating your calls to our service.

Please note that those credentials are for the Test environment only, so they will only work with the Integration Test, Test API and E2E Test endpoints.
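As a rough illustration of this step, the snippet below builds (without sending) a token request from a client ID and secret. It assumes an OAuth2-style client-credentials exchange and a Bearer header; the URL, field names and grant type are placeholders we made up for the example, not the documented Adapter API, so check the Developer Portal for the real values.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical placeholder: the real token URL is listed in the Developer Portal.
TOKEN_URL = "https://auth.example.invalid/oauth/token"


def build_token_request(client_id: str, client_secret: str, url: str = TOKEN_URL) -> Request:
    """Build (but do not send) a form-encoded token request.

    Assumes an OAuth2-style client-credentials exchange; the field names
    are illustrative, not taken from the Adapter documentation.
    """
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
    }).encode("utf-8")
    return Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def bearer_header(access_token: str) -> dict[str, str]:
    """Header to attach to each authenticated call (assumed Bearer scheme)."""
    return {"Authorization": f"Bearer {access_token}"}
```

Keeping request construction separate from sending makes it easy to unit test the integration before any network call is involved.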

a. Registering a Test Vehicle

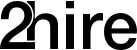

To initiate the integration process, you need to create a mock vehicle for testing and development purposes. You can easily accomplish this using our convenient E2E test section.

The vehicle creation endpoint returns a set of IDs. The identifier is used with the E2E Test endpoints to control the newly created mock vehicle, while the other ID (its name may vary for each connectivity provider, e.g. qrCode for 2hireBoard) is used to register the vehicle with the Test API.

After creating the mock vehicle, you must register it through the Test API using the registration endpoint. This step enables the Adapter Test environment to recognize and establish a connection with your vehicle.

For this example, we will use a 2hireBox mock vehicle. To create it, simply use the create vehicle endpoint, leaving the default body parameters. You can conveniently call the endpoint within our Developer Portal.

As already mentioned, the response will provide the list of device IDs to be used in the registration endpoint. Specifically, for the 2hireBox mock, you need to retrieve the QR code.

Additionally, to register a 2hireBox, you must specify a profile ID.

Please note that different connectivity providers may have additional required properties aside from the vehicle identifier, so make sure to check any specific integration requirements.

To quickly obtain a profile ID suitable for testing purposes, you can use the public profile list endpoint.

Once you have both the QR code and profile ID, you can proceed with registering the mock vehicle in the test environment.
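The order of calls described above can be sketched as follows. The two classes are in-memory stand-ins we invented so the example runs on its own; the method names and response shapes are assumptions, not the real E2E Test or Test API contracts.

```python
from dataclasses import dataclass, field


class FakeE2E:
    """In-memory stand-in for the E2E Test endpoints (hypothetical shape)."""

    def create_vehicle(self) -> dict:
        # Assumed response: one id to control the mock, one to register it.
        return {"identifier": "mock-123", "qrCode": "QR-ABC"}


@dataclass
class FakeTestApi:
    """In-memory stand-in for the Test API (hypothetical shape)."""

    registered: list = field(default_factory=list)

    def list_public_profiles(self) -> list:
        return [{"id": "profile-1"}]

    def register_vehicle(self, qr_code: str, profile_id: str) -> dict:
        record = {"qrCode": qr_code, "profileId": profile_id}
        self.registered.append(record)
        return record


def register_mock_vehicle(e2e, test_api) -> dict:
    """Create a mock vehicle, pick a test profile, then register the vehicle."""
    created = e2e.create_vehicle()
    profile_id = test_api.list_public_profiles()[0]["id"]
    registration = test_api.register_vehicle(created["qrCode"], profile_id)
    # Keep the E2E identifier: it is what controls the mock in later tests.
    return {"e2e_identifier": created["identifier"], "registration": registration}
```

In a real integration, the two stand-ins would be replaced by thin HTTP clients for the corresponding Developer Portal sections.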

b. Integrating the Generic Commands

Next, we will integrate the generic commands exposed by Adapter, namely the Start, Stop, and Locate commands. We will focus on the start and stop commands as they are the fundamental commands for controlling the vehicle and are intuitively used to initiate or conclude a trip.

Depending on the vehicle model, the start command will execute the necessary procedures to enable the user to access the vehicle and start driving. Adapter takes care of all the required work to prepare the vehicle for the trip, so it is transparent to you. The same applies to the stop command, which handles all the necessary actions to conclude the trip and shut down the vehicle.

It is important to implement proper error handling on your side. Commands may fail for various reasons, and your application should react based on your own business logic. In our Developer Portal, you will find a comprehensive list of status codes and error messages that may arise from each command. We provide standardized error codes, and the response always includes a cause field.
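One possible way to structure this on the client side is shown below. It is a minimal sketch: the set of retryable status codes is our own assumption for the example, and the only detail taken from the text above is that a failed response carries a cause field.

```python
from dataclasses import dataclass


@dataclass
class CommandOutcome:
    ok: bool
    retryable: bool
    message: str


# Hypothetical choice: which statuses are worth retrying is a business
# decision; the actual list of codes is in the Developer Portal.
RETRYABLE_STATUSES = {408, 429, 503}


def interpret_command_response(status: int, body: dict) -> CommandOutcome:
    """Turn a command response into something business logic can act on."""
    if 200 <= status < 300:
        return CommandOutcome(ok=True, retryable=False, message="command accepted")
    # A cause is expected to be present; fall back defensively anyway.
    cause = body.get("cause", "unknown cause")
    return CommandOutcome(
        ok=False,
        retryable=status in RETRYABLE_STATUSES,
        message=f"command failed ({status}): {cause}",
    )
```

Your application can then decide, per outcome, whether to retry, notify the user, or flag the vehicle for support.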

We encourage you to extensively test different scenarios by changing the vehicle status through the E2E test suite to observe the generated responses.

Step 3: Transition to Production

After successfully completing the integration and testing phase, you are ready to transition to the production environment. This involves checking eligibility for production, registering your vehicles in the live system, and utilizing the full suite of 2hire Adapter functionalities. You can test each endpoint beforehand using our Developer Portal.

a. Authentication

The authentication process in production is the same as in the Test suite. However, you will need to obtain your production credentials from your 2hire business contact.

b. Vehicle Registration

Once your vehicles are deemed eligible, you can proceed with the registration process in the live system. Make sure to gather all the necessary information for registering your vehicles based on the selected connectivity provider, ensuring a smooth registration without any delays. You can find all the details in the Developer Portal.

c. Utilizing the Production Suite of 2hire Adapter

Once registered, you can start interacting with your real vehicles through Adapter’s functionalities, including data exposure and command forwarding, which were developed using the Test suite.

Step 4: Integrating Adapter into Your Car Rental/Peer-to-Peer Car Sharing Product

Congratulations! You have successfully integrated the Adapter generic commands into your car rental or peer-to-peer car sharing platform. Now you can implement your own business logic while leaving the “hard work” of interacting with your vehicles to Adapter. With just one integration, you can connect, manage, and control various vehicles from different manufacturers, as long as they are compatible with our service. How cool is that?

Stay tuned for the next installment in this series, where we will delve deeper into the advanced features and customization options offered by 2hire Adapter.

Curious to know more about how 2hire technology can power your mobility business?

About the author

Veronica Iovinella

Senior Engineer at 2hire

Computer engineer with a passion for art. When not coding, you may find me painting, reading, binge-watching tv shows, or, most probably, sleeping.

Posted on July 19, 2023 by Benedetta Biggi

The lifetime of a Story

27 Mar 2023 | 4 min read

Managing a dev team can be a complex task, especially when there are multiple teams working on different projects. In such scenarios, using Agile and Scrum methodologies can help streamline the development process and ensure everyone is on the same page. I would like to discuss with you today how our company 2hire, and specifically the Phoenix development team, manages our workload and collaborates using Agile and Scrum methodologies.

Wait… What are Agile and Scrum?

It is no secret that managing dev work is hard, with lots of unknown and unexpected issues coming up when you least need them. Many great people have tried to figure out the best way to schedule dev work, keep track of progress, and react immediately to the unforeseen. Eventually, in the early 2000s, the Agile Manifesto was released. Agile is a software development methodology that focuses on delivering value to the customer through iterative and incremental development. It emphasizes collaboration, flexibility, and rapid response to change. Scrum is a particular Agile framework that provides a structured approach to software development. It involves a set of ceremonies that help teams organize and manage their work. Because Scrum is a guideline for implementing Agile, it is not uncommon to find many different versions or variations of it, and we are no exception.

The Phoenix Team

The Phoenix team is one of several dev teams in 2hire. Like most dev teams we are structured as: Team Leader, Team Product Manager, Developers, and Product Owner. The Team Leader oversees the development process, while the Team Product Manager communicates with stakeholders. The developers work on completing the stories, and the Product Owner prioritizes the product backlog. At this moment, we can count 5 developers, 1 Team Leader, 1 Product Manager and 1 Product Owner, making up a team of 8 members.

We follow most of the Scrum ceremonies, but we are not very strict about applying every single rule. As an example, we work in one-week “Sprints”. In Scrum, a Sprint is a fixed period of time during which the team works to deliver a potentially releasable increment of product functionality. It involves several fixed meetings to define the stories and tasks from the Backlog to be completed during the Sprint (Sprint Planning) and, finally, to present the results (Sprint Review). As a team, we are not too focused on strictly following the Sprint definition; we use it more as a tool to keep track of the work in progress.

Instead of a Sprint Planning meeting, we hold our Refinement meetings on Mondays: this is a 1-hour meeting in which we discuss new stories to be moved from the Backlog to the To-Do list. The stories are presented to the team and prioritized based on their importance and urgency. Then, the team decides the commitment for the week, which includes both hard and soft commitments. We are not bound by a strict pledge to complete all the tasks within the week; rather, it is a challenge we pose to ourselves as a team to meet our desired goal. We put under hard commitment all the stories we feel confident about, and use the soft commitment for the stories that may have unknown issues or blockers.

What do we mean by “stories”?

In Scrum, a “story” is a high-level requirement for a feature or functionality that is being developed. User stories are written on index cards or in a digital tool and added to a Backlog, which is a prioritized list of all the features and requirements for the product. Typically, during Sprint planning meetings, the team selects a subset of stories from the Backlog to work on during the upcoming Sprint.

In our case, we define stories by giving a background or general description of the requirement, and then we describe in detail the Acceptance Criteria (AC), which are a set of conditions that must be met to consider the story complete. We are mostly flexible while working on a story, so whenever we find that some AC cannot be met or must be changed for any reason, we promptly talk about it during our daily standups and take action.

Daily Standups

Every day of the week, we hold a quick daily standup meeting (from 15 to 30 minutes at most) to check the progress on the stories and coordinate our work. During the meeting, we discuss any blockers or issues we are facing, as well as offer help to team members who need it. This ensures that everyone is aware of what is going on and that we are all working towards the same goal.

Story Phases

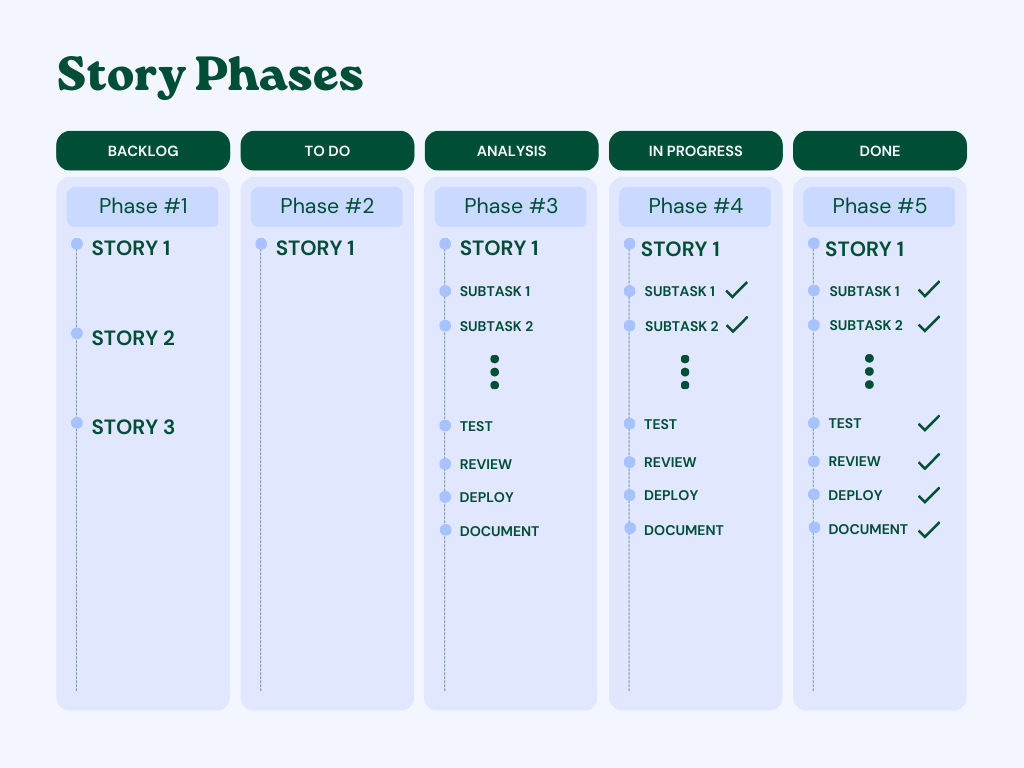

Each story in our “Sprint” goes through several phases. First, during the Refinement meeting, it is added from the Backlog to the To-Do column of our Kanban board. Then, we move it to the Analysis column and start analyzing it, planning subtasks, and identifying possible blockers. Next, we move it to the In-Progress column, where we work on it and update the subtasks as we go.

Test, Review, Deployment, and Documentation

Once all the subtasks are done, we go through a few additional but mandatory steps: tests, review, deployment, monitoring, and documentation. The only exception is Spike stories, which involve only case studies, investigations or analysis and therefore skip the test, review and deployment steps.

Before concluding the story, we always spend some time contributing to the test coverage of our app. Most of the time we add significant test cases to our end-to-end test suites, but on other occasions we may find it useful to add unit tests. It is important to always pay attention to edge cases, as well as to include regression tests to prevent future bugs.

The review is usually done by a team member who did not work on the story. This ensures that the code is of high quality and meets our standards, and it double-checks all the requirements. Deployment is the process of moving all the changes to production. We also monitor the changes to ensure everything is working as expected.

Finally, we document all our stories in our company Notion account: we write a new document for every story, reporting the story description, how we implemented it, and any errors or problems we encountered, often concluding with next steps to follow up on, which may lead to new stories being added to the Backlog.

Demos

In typical Scrum fashion, every two weeks we hold Demo meetings to present all the stories completed over the two-week period. This 1-hour meeting has the same purpose as the classic Sprint Review meeting, even though we cannot always present our work to stakeholders. Instead, we take the chance to share important information between team members, to make sure we are all informed about every new feature so that anyone can take over work on different projects or epics. Moreover, it often becomes the right occasion to discuss the proposed next steps for the stories together, even drafting entirely new follow-up stories.

On Being Collaboratively Selfish

I would like to spend a few more words on what I find to be the most interesting approach we have adopted in our team, which is also what makes the whole system work. In addition to working on stories individually, the Phoenix team often works in pairs to speed up the development process and reduce the likelihood of errors. This helps us to achieve our commitments more efficiently and effectively. Moreover, we have developed the concept of being “collaboratively selfish”, where we strongly encourage team members to ask for help directly when they need it. Even when we think we may disturb others or be a burden, we believe it is better to call out to someone rather than keep trying alone. This attitude helps us work together as a team and avoid unnecessary delays or bottlenecks. It also helps us to maintain a positive work environment where everyone feels supported and valued. We understand that sometimes we may be too focused on our own tasks, but it’s important to take time out to help team members as needed. This collaborative approach ensures that everyone is working towards the same goal and helps us to achieve better outcomes. By working collaboratively and promoting this “collaboratively selfish” culture, we can continue to achieve our goals and deliver high-quality work.

Our key to success

The Phoenix dev team worked hard to find the right balance to manage our work efficiently, creating our own framework to handle all the workload and the unexpected. Our daily standups, story phases, and mandatory steps ensure that our work is of high quality and meets our standards. The demos allow us to get feedback and share among ourselves the knowledge we gain while working on the stories. But, most importantly, we found a way to collaborate that puts the well-being and success of the whole team first, without leaving anyone behind.


Want to join the team? 😎🚀

About the author

Veronica Iovinella

Senior Engineer at 2hire


Posted on March 16, 2023 by Benedetta Biggi

How does 2hire API Layer Adapter work?

07 Mar 2023 | 4 min read

How does Adapter work?

In our opening chapter about Adapter, we showcased the capabilities of the software solution that 2hire offers and gave a first glimpse into standardizing communication across the different OEMs on the market, so that connected service providers can communicate with all of them in the same way. Today we put a spotlight on Adapter’s technology stack and dive a bit deeper into how Adapter’s architecture actually works.

Adapter is the layer that enables communication between vehicles and end users. This cloud-based technology handles interactions from thousands of users engaging with thousands of connected cars all over the world. But how exactly does Adapter work? Let’s take a closer look at its components and mechanisms in a light-hearted way!

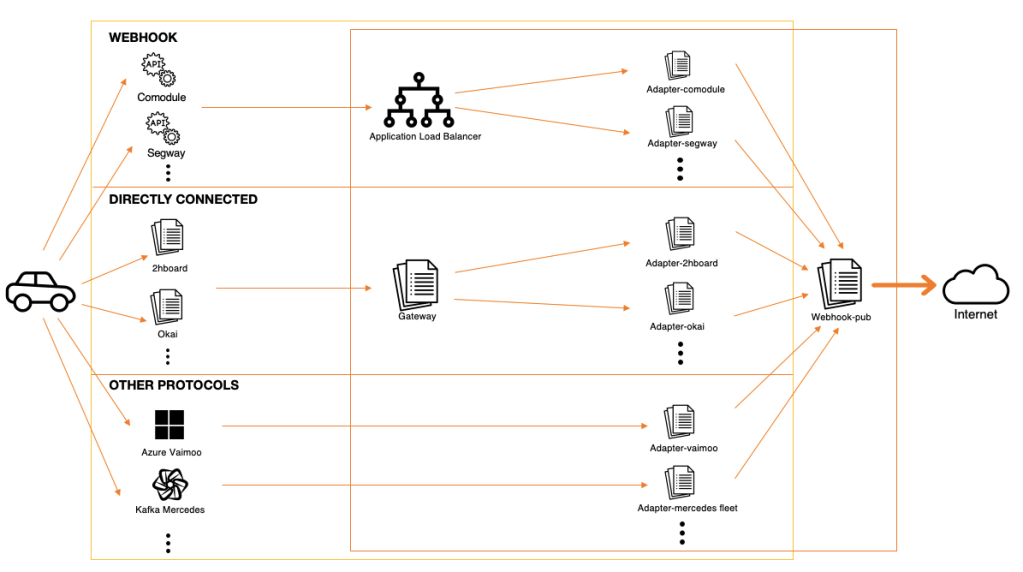

Services composing Adapter

You might think that Adapter is just one piece of software, but it is actually a cluster of microservices working together to expose a unified and compact interface. There are around 20 different services that contribute to the Adapter architecture, and the number is growing fast.

Let’s go over the microservices composing Adapter:

ALB (Application Load Balancer)

The ALB is a very common component in many of the services we use every day on the internet. Its responsibility is to distribute request loads over multiple replicas serving your application.

It’s crucial to ensure that the system can handle the high volume of incoming requests, particularly during peak times, by balancing the load evenly among the replicas. This not only improves the overall performance of the system but also provides a failover mechanism in case one of the instances goes down.
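To make the idea concrete, here is a toy round-robin balancer with failover. It is only a sketch of the general technique: the class and replica names are invented for the example, and a managed load balancer handles health checks and routing in far more sophisticated ways.

```python
class RoundRobinBalancer:
    """Cycle through replicas, skipping any that are marked unhealthy."""

    def __init__(self, replicas: list[str]) -> None:
        if not replicas:
            raise ValueError("at least one replica is required")
        self._replicas = list(replicas)
        self._healthy = set(replicas)
        self._next = 0  # rotation cursor

    def mark_down(self, replica: str) -> None:
        self._healthy.discard(replica)

    def mark_up(self, replica: str) -> None:
        if replica in self._replicas:
            self._healthy.add(replica)

    def pick(self) -> str:
        # Try each replica at most once, starting from the rotation cursor.
        for _ in range(len(self._replicas)):
            candidate = self._replicas[self._next]
            self._next = (self._next + 1) % len(self._replicas)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy replicas available")
```

When a replica is marked down, requests simply flow to the remaining ones, which is the failover behaviour described above.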

API Gateway

The API Gateway is the second component in the pipeline for communication between users and vehicles. It acts as the gatekeeper for incoming requests from the end-user. It’s responsible for checking the authentication of each request, verifying the user’s identity, and forwarding it to the correct sub-service within the Adapter. The API Gateway also performs other security-related functions, such as rate limiting and request throttling, to ensure the overall security of the system.
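Rate limiting is often implemented with a token bucket. The sketch below shows that general technique, not the algorithm our API Gateway actually uses; the capacity and refill rate are arbitrary example values, and the clock is passed in explicitly to keep the example deterministic.

```python
class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, capacity: float, rate: float, now: float = 0.0) -> None:
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity  # start with a full bucket
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

A gateway would typically keep one bucket per client and answer with an error status when `allow` returns `False`.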

Adapter

This macro-category (yes, Adapter in Adapter) is the heart of the Adapter service and refers to all the services that enable specific vehicle integrations. Right now there are around 15 different services under this category, including integrations with car manufacturers such as Stellantis, Ford, and Toyota; third-party providers such as E-GAP, Reefilla, and WashOut; and, most importantly, our proprietary 2hire box. The Adapter services form the core of the Adapter architecture: each service handles the communication with specific vehicles, processing the incoming commands or signals and forwarding them to the correct destination.

Gateway

Like our 2hire box, some other integrations also require a direct connection with the vehicle rather than going through a provider’s API services. The Gateway service handles direct communication with the vehicles, managing every aspect of it, from protocol connections to encoding and parsing messages.

Webhook Publisher

The Webhook Publisher service closes the loop by forwarding all information from the vehicle to the end-user. The forwarded data is usually received and handled either by third-party services or our own Sharing service, which processes the data to make it available to the end user through their app.

Interactions between Services

To understand how the different services within Adapter interact with each other, let’s take a closer look at the two communication flows: from the User to the Vehicle, and from the Vehicle to the User.

User to Vehicle

When a User interacts with a Vehicle through their app of choice, the message is sent to the cloud and routed to the Application Load Balancer (ALB). The ALB distributes the user-generated message load across the service replicas. From there, the message is processed by the API Gateway. This service acts as a checkpoint, verifying the authenticity of the message and directing it to the appropriate Adapter sub-service.

The selected Adapter then processes the command and forwards it either to the Provider API, if the message is meant for a third-party service, or to our proprietary Gateway service, if the message is meant for a vehicle powered by the 2hboard or another directly connected vehicle.
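The forwarding decision described above can be pictured with a small dispatch function. This is a simplified sketch: the connectivity labels, the vehicle dictionary shape and the two sender callables are hypothetical names chosen for the example, not Adapter internals.

```python
from typing import Callable

# Hypothetical labels for how a vehicle is reached; not Adapter's real names.
VIA_PROVIDER_API = "provider_api"
VIA_GATEWAY = "gateway"

GENERIC_COMMANDS = {"start", "stop", "locate"}


def forward_command(
    vehicle: dict,
    command: str,
    send_to_provider: Callable[[str, str], str],
    send_to_gateway: Callable[[str, str], str],
) -> str:
    """Forward a command along the path that matches the vehicle's connectivity."""
    if command not in GENERIC_COMMANDS:
        raise ValueError(f"unsupported command: {command}")
    if vehicle["connectivity"] == VIA_PROVIDER_API:
        # Vehicle is reached through a third-party provider's API.
        return send_to_provider(vehicle["id"], command)
    if vehicle["connectivity"] == VIA_GATEWAY:
        # Vehicle is directly connected, so the Gateway service talks to it.
        return send_to_gateway(vehicle["id"], command)
    raise ValueError(f"unknown connectivity: {vehicle['connectivity']}")
```

The caller sees a single function regardless of the vehicle, which mirrors the unified interface Adapter exposes.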

Vehicle to User

On the other end, depending on the connectivity provider, the vehicle data is either received through directly connected webhooks that pass through the ALB, or through the Gateway service that handles the communication specification. The parsed messages are then sent to the corresponding Adapter Service, which performs any necessary processing. This could include exposing command responses and received signals, such as generic signals for common usage or specific signals for the vehicle family or group.

Finally, to forward the vehicle data to the end-user, the Adapter services utilize the Webhook Publisher service.

This software gathers the vehicle data and makes it available to the end-users by sending it to any service or application that has subscribed to the Adapter webhook service.
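Conceptually, this is a publish/subscribe fan-out. The following in-memory sketch illustrates the pattern under simplifying assumptions: subscribers are plain callables rather than HTTP endpoints, and the class, method and event names are invented for the example.

```python
from collections import defaultdict
from typing import Callable


class WebhookPublisher:
    """Fan out vehicle events to every subscriber registered for that event type."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, deliver: Callable[[dict], None]) -> None:
        self._subscribers[event_type].append(deliver)

    def publish(self, event_type: str, payload: dict) -> int:
        """Deliver the payload and return how many deliveries succeeded."""
        delivered = 0
        for deliver in list(self._subscribers.get(event_type, [])):
            try:
                deliver(payload)
                delivered += 1
            except Exception:
                # One failing subscriber must not block the others.
                continue
        return delivered
```

In a real deployment each delivery would be an HTTP call to the subscriber's registered URL, usually with retries for failed attempts.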

Let's wrap it up

Adapter is a complex piece of technology that handles the communication between vehicles and end-users. It’s composed of multiple microservices working together to provide a seamless experience for the users. Each service plays a unique role in the overall architecture, ensuring the secure and efficient delivery of information between the vehicle and the client. Adapter’s robust and flexible architecture enables it to accommodate the ever-evolving needs of the connected vehicle industry, making it a critical component in the future of transportation.

Curious to know more about our technology and the tech-driven team that runs all of this?

About the author

Veronica Iovinella

Senior Engineer at 2hire


Posted on February 21, 2023 by Benedetta Biggi

The Microchip Crisis: Facing Global Challenges

13 July 2022 | 2 min read

“Microchips are in many ways the lifeblood of the modern economy. They power computers, smartphones, cars, appliances and scores of other electronics. But the world’s demand for microchips has surged since the pandemic, which also caused supply-chain disruptions, resulting in a global shortage.” – NY Times

The gloomy economic outlook being felt worldwide owes much to several factors, and the microchip crisis is undoubtedly one of them. Our era of IoT and connectivity demands a level of supply that seems constantly out of reach, and the automotive industry alone has faced repeated disruptions and delays because of the microchip shortage affecting our industrialized world.

At best, the chip crisis makes vehicles of all kinds far more difficult for consumers to purchase; at worst, it forces even the most seasoned automotive OEMs to halt production at a time when boosting productivity and economic output has never been more important.

Most concerningly, the chip shortage affecting both the automotive industry and the technology ecosystem at large seems to only catch marginal reprieves – never a full solution.

The question here arises: What has led to this microchip crisis, and what is being done to overcome it?

What caused, and contributes to, the global chip shortage

While the automotive chip shortage has undoubtedly had a big impact on the number of new vehicles entering the market, the entire technology industry is similarly being buffeted by these circumstances – from interactive entertainment to work computers and household electronics.

Yet with many industries recovering post-pandemic, why does the microchip crisis persist?

The ability to source microchips and control mineral resources is directly linked to dominance in strategic technology sectors, and the US and China currently have the widest margin of control.

While it’s easy to blame disruptors such as the growth of working from home or the effect of lockdowns in China, the world is also enduring significant supply chain woes.

Soaring demand has met a lack of rapid production techniques: POET Technologies CEO Dr. Suresh Venkatesan advises that advanced chips take over 20 weeks to produce, which means that even efforts to accelerate production today are only barely being felt in tangible results.

What effects is the chip shortage having on the automotive industry?

Production plant shutdowns and difficulties in obtaining the materials necessary for vehicles with even more IoT capabilities mean that the automotive sector has endured a difficult few years under the yoke of the global chip crisis.

After the 40% production cut announced by Japan’s Toyota, Stellantis and Volkswagen also had to intervene in August 2021 to cope with the shortage of microchips. Stellantis was forced to stop production at two factories in France, in Janais and Sochaux. Volkswagen announced a production cut at the Wolfsburg factory, the German group’s main one.

And since fewer cars are being produced at higher prices, the leasing, rental, car subscription, and car sharing market is gaining in relevance.

However, further burdens are being felt not only in OEMs’ order books but in their bottom lines. The global chip crisis has fallen amid an era of evolution for automotive OEMs, as the demand to shift from internal combustion engine (ICE) vehicles to battery electric vehicles (BEVs) continues to add complexity to the conversation. Research firm AlixPartners, already recognising that the microchip crisis will cost the automotive sector $210 billion worldwide, has also stated that the increased IoT and technological complexity of BEVs will create more bottlenecks.

How are automotive manufacturers responding to the microchip crisis?

Necessity demands agility, and the automotive industry is turning the tide on the chip shortage not only through better forward planning but also by sidestepping certain restrictions.

For example, software in IoT elements of modern vehicles is being rewritten to use fewer chips for more functionality.

More prominently, microchip production is being reshored in the United States and worldwide, easing immediate chip crisis woes by keeping production closer to home.

How is the EU helping overcome the microchip crisis?

Expanding on the reshoring idea discussed above, the EU announced the European Chips Act in February 2022.

This movement aims to centralise much of semiconductor production in European nations, in a bid to improve supply chain resiliency and mitigate the seemingly endless churn of the global chip crisis.

By 2030, the EU aims to more than double European semiconductor research, development and manufacture – leaping from 9% of global output to 20% by the new decade’s dawn.

Big tech businesses are echoing the EU’s positive stance against the microchip crisis too: Intel is investing $36 billion in developing European semiconductor production potential, with production coming online by 2027.

According to sources, Intel and the Italian government are discussing a total investment of $9 billion over 10 years from the start of the construction of the factory in the country. Rome wants Intel to clarify its plans for Italy before formalising a package of favourable conditions, especially regarding jobs and energy costs. Once Rome and the American company have reached an agreement, the next step will be to decide where to build the Italian production facility.

For now, the global chip shortage remains a key area of concern, for the automotive sector and beyond. Yet hope is on the horizon, and resources are being put into action, causing many to feel optimistic that a difficult few years may soon give way to a reality on the upswing.

Learn more about 2hire world here 👀

Contact us to disrupt mobility together ⚡️

About the author

Benedetta Biggi

Sales and Marketing Associate at 2hire

I love running and daydreaming losing count of the distance I’m covering, cooking (and especially eating) and Drake is my spirit guide.

Posted on July 8, 2022 by Benedetta Biggi

How Artificial Intelligence Can Change the Mobility Industry

Theories and concepts about Artificial Intelligence date back to the 1950s. Yet Artificial Intelligence (AI) has only achieved practical applicability in the last two decades, with the rise of machine learning and deep learning. Today, everybody is talking about AI, and businesses across various industries have realized that this revolutionary technology can drastically increase the speed and quality of their products and services.

At this stage, AI is on its path to creating a revolution in the mobility industry. Cities around the world are constantly suffering from increasing traffic, limited availability of space in urban areas, traffic-induced noise, and pollution. Meanwhile, cities are becoming more and more connected and harmonized with sensors, and so the possibilities for AI to improve energy efficiency, liveability, and mobility, will grow exponentially.

Let’s take a look at why AI is the key enabling technology in creating individualized, environmental-friendly, and autonomous mobility systems.

Artificial Intelligence and Mobility Systems

The role of AI in mobility systems will undeniably be tied with the development of smart cities. Both concepts are becoming a necessity, as the urban population is increasing constantly and consumer needs are changing every day.

According to research conducted by the United Nations Department of Economic and Social Affairs, 55% of the world’s population lives in urban areas. What’s more, this number is going to rise to 68% by 2050.

This rapid increase of population in major cities will lead to pressure for sustainable environment initiatives, demands for better infrastructure, and better quality of life. Smart cities, powered by AI technology, are a part of the solution to these demanding challenges urban areas face.

To function properly, smart cities require the processing of enormous amounts of data, also known as Big Data. Big Data is usually described in three terms: high volume, high velocity, and high variety. High volume refers to massive datasets, high velocity means the data arrives and must be processed very quickly, and high variety refers to the use of many different data sources and formats.

When AI and Big Data collaborate, the results can be more than promising. AI is described as a non-human system that learns from experience and imitates human behavior. It can efficiently look through Big Data, and create predictions and cost-effective solutions to drive smart city technologies.

Smart city technologies will play an important role in fixing the ongoing problems of public transit and public safety. When it comes to public transit, cities with large transit infrastructures have realized that they must begin harmonizing the experience of their passengers. Whether passengers are traveling by car, moped, scooter, train, or bus, they can provide real-time information using their mobile apps. As a result, passengers can report delays and breakdowns and find less congested routes.

Once cities gather and analyze public transit usage data, they can make better decisions when improving routes and timings, and distribute infrastructure budgets more accurately.

Dynamic Pricing

Transportation is heavily tied to complex hardware-based ticketing systems that don’t have the flexibility for dynamic pricing. Once transit operators switch to software-based platforms, they will be able to know in real time how many seats have been booked and how many tickets have been sold. Furthermore, operators can cross-reference these data points with the capacity of trains and buses to propose different prices throughout the day. Using AI, operators can learn from rider patterns and use that data to form a dynamic pricing strategy.
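To make the idea of cross-referencing bookings with capacity more concrete, here is a minimal sketch in Python. It is only an illustration: the function name `dynamic_fare`, the linear scaling rule, and the 0.8 and 1.5 price factors are assumptions made for this example, not a description of any operator's actual pricing engine.

```python
def dynamic_fare(base_fare: float, seats_booked: int, capacity: int,
                 min_factor: float = 0.8, max_factor: float = 1.5) -> float:
    """Scale the base fare linearly with the share of seats already booked.

    min_factor and max_factor are hypothetical bounds chosen for illustration.
    """
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    if not 0 <= seats_booked <= capacity:
        raise ValueError("seats_booked must be between 0 and capacity")

    load = seats_booked / capacity  # 0.0 = empty vehicle, 1.0 = fully booked
    factor = min_factor + (max_factor - min_factor) * load
    return round(base_fare * factor, 2)


# An empty bus is discounted, a full one carries the highest price.
for booked in (0, 50, 100):
    print(booked, dynamic_fare(2.0, booked, capacity=100))
```

In a real system, the simple linear rule would likely be replaced by a model trained on rider patterns, but the inputs (bookings and capacity) would be the same.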

Dynamic pricing has been around since the 70s, when it was mainly used by airlines for selling airplane tickets. Unlike fixed prices, dynamic prices are set based on current demand and other factors. Therefore, prices can change every day and even throughout the day.

Artificial Intelligence can absorb large amounts of data from various sources, including historical booking and pricing information, route information, schedule changes, competitor pricing, and web user interaction behavior. Dynamic pricing engines, when combined with machine learning, can suggest price structures that are adjusted on an ongoing basis.

Urban mass transit, by contrast, usually has fixed prices. Passengers pay the same price at 1 a.m. as they would pay at 1 p.m. or 3 p.m. This system is counterproductive if the goal is to prevent rush-hour crowds and distribute passengers more evenly across vehicles throughout the day. To fix this, transit operators could apply the highest price during rush hour and lower prices during less busy periods. This would encourage passengers to ride when the price is lower, and would also have a positive impact on urban mobility and public health.
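The peak and off-peak idea can be sketched in a few lines of Python. The rush-hour windows and the multipliers below are hypothetical values picked for the example; a real operator would derive them from ridership data.

```python
from datetime import time

# Hypothetical rush-hour windows (start inclusive, end exclusive).
PEAK_WINDOWS = [(time(7, 0), time(9, 30)), (time(16, 30), time(19, 0))]


def time_of_day_fare(base_fare: float, departure: time,
                     peak_multiplier: float = 1.25,
                     off_peak_multiplier: float = 0.85) -> float:
    """Charge more inside a rush-hour window and less outside of it."""
    is_peak = any(start <= departure < end for start, end in PEAK_WINDOWS)
    multiplier = peak_multiplier if is_peak else off_peak_multiplier
    return round(base_fare * multiplier, 2)


print(time_of_day_fare(2.0, time(8, 0)))   # rush hour
print(time_of_day_fare(2.0, time(13, 0)))  # early afternoon
```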

Interestingly, ride-hailing companies have implemented a similar strategy called surge pricing. In plain words, they raise prices during peak demand and lower them when there is less demand.

A similar concept, called congestion pricing, has had a good impact in London. Drivers are charged a premium tariff when they want to drive in or out of the city center during rush hour.

It may be hard to believe, but a Swedish railway company called SJ has been offering dynamic pricing on its tickets since 2004. The company has been selling tickets online since 1997, which helps explain why more than 90% of its passengers purchase dynamically priced tickets.

Artificial Intelligence Applied to Smart Mobility

The goal of smart mobility systems is to increase safety, reduce traffic congestion, improve air quality, and reduce noise pollution and costs. Smart mobility systems have been recognized as essential for decarbonizing the transport sector and reaching the EU emission reduction goals. Artificial intelligence is proving to be a powerful tool that has the potential to drive a sustainable transition to more efficient, liveable, and more human-centric mobility systems.

AI, when applied to urban mobility, can rely on data from existing infrastructures such as traffic controller detection, urban centers, video data, fleet data, and public and private third-party data. In this transition, the public sector will play an important role in ensuring that AI solutions are secure, inclusive, and rely on non-biased, fairly shared data that still preserve citizens’ privacy.

The shift towards AI-driven mobility will have a positive impact on all the value chains involved. Municipalities and private mobility operators will need to work together to bring this evolution closer.

Mobility-as-a-Service

MaaS systems have proven to be a great alternative to personal transport. These systems offer different means of transportation, and users can book, manage, and pay using their smartphones. Also, MaaS is proving to be a key player in reducing traffic congestion, enabling vehicle-free cities, and system-level optimization of mobility investments.

When powered by AI controllers, MaaS systems can optimize, monitor, and coordinate fleets and, at the same time, offer great options to individual users. What’s more, AI-based MaaS can enable ride-sharing users to share autonomous vehicles across an optimized route in a much cheaper and safer way.

The Future Is Here

Self-driving vehicles are finally making their way into the transportation sector. Although the majority of these vehicles are still pilot projects, some companies have already deployed their vehicles on public roads.

Computer vision and deep learning systems are the foundation of self-driving vehicles. They are responsible for processing and giving context to all the data that is received from the sensors. Self-driving cars collect data from various sources such as cameras, radars, LIDAR, etc.

As AI technology continues to evolve, self-driving cars might become increasingly popular among consumers.

What's the next step?

As AI and machine learning continue to evolve, there is no doubt that the mobility industry will be changed forever. In the future, AI assistants will organize our trips and instantly help us find, book, and pay for the best transport option, depending on our situation and needs.

As of now, mobility is such a fast-paced phenomenon that we always have to be ready for the next change. Just think of the helicopter cab that takes you from Fiumicino airport to Rome, or Elon Musk, who wants to replace Los Angeles-Sydney flights with SpaceX rockets.

These are just a few novelties worth mentioning. What is certain is that the mobility of this era is a real booster for accessibility and flexibility.

Artificial intelligence will not just change the way we travel, but will also revolutionize our lives in urban areas by improving energy efficiency and the overall quality of life.

About the author

Emanuele Loreti

Customer Success Specialist at 2hire

I think that the adjective that describes me most accurately is: curious. My curiosity defines my primary interest: reading. Reading entails a contemplative state of mind.

Posted on December 15, 2021 by Benedetta Biggi

Big Data: The Future of Urban Mobility Systems

12 Nov 2021

In this day and age, every industry, including transportation, records an extraordinary amount of data. Big Data has emerged as a result of rapidly decreasing costs of collecting, storing, processing, and distributing data. This decrease in data storage costs has made it possible to keep data rather than discard it. To quote science historian George Dyson: “Big Data is what happened when the cost of storing information became less than the cost of throwing it away.”

In the past, data that was considered insignificant or trivial (“digital dust”) was discarded. Today, “digital dust” is analyzed with sophisticated software, and when merged with other seemingly trivial contextual data, it can provide valuable insights.

Let’s take a look at why Big Data is the future of urban mobility operations and how it can help travelers, transportation services, and public agencies make smarter decisions.

Transportation

Without a doubt, we have witnessed the rapid development of software that is changing our transportation. Most people are using mobile websites and apps for a variety of transportation functions including vehicle routing, parking, trip planning, and fare payment.

Most people aren’t aware that real-time analytics and algorithms are constantly working to improve their travel experience. This includes managing crowdsourced and flexible routing, providing predictive analytics for accurately forecasting and responding to demand, and improving operational responses when natural or manmade hazards occur.

Although public transportation services already use a vast amount of data in their modeling and operations, Big Data combined with data sharing has much greater potential to enhance transportation planning and traveler services.

A good example of this was the 2014 FIFA World Cup in Rio de Janeiro. The local government acquired driver navigation data from Google’s Waze and combined it with data gathered from the public transit app Moovit. Together, this data provided crucial real-time information about the transportation network. As a result, engineers and local transportation planners were able to access data on half a million drivers and identify operational issues.

Data Extraction

An important factor to consider when selecting a data source is its quality for analysis. Data analytics refers to how information is extracted from a data set. First, the data is categorized into relevant fields such as origin, destination, time, longitude, and latitude. Next, a series of operations is performed to clean, transform, and model the data to obtain significant conclusions.
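As a rough illustration of the categorize-and-clean step, the Python sketch below turns raw trip records into typed fields and drops records that cannot be used. The field names (`origin`, `destination`, `time`, `lat`, `lon`) and the `Trip` class are assumptions made for this example; real data sources will use their own schemas.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Trip:
    origin: str
    destination: str
    started_at: datetime
    latitude: float
    longitude: float


def parse_trip(raw: dict) -> Optional[Trip]:
    """Return a cleaned Trip, or None if the raw record is unusable."""
    try:
        lat = float(raw["lat"])
        lon = float(raw["lon"])
        started_at = datetime.fromisoformat(raw["time"])
    except (KeyError, TypeError, ValueError):
        return None  # missing or malformed field

    # Reject coordinates that cannot exist on Earth (this also rejects NaN).
    if not (-90 <= lat <= 90 and -180 <= lon <= 180):
        return None

    origin = str(raw.get("origin") or "").strip()
    destination = str(raw.get("destination") or "").strip()
    if not origin or not destination:
        return None

    return Trip(origin, destination, started_at, lat, lon)


def clean_trips(raw_records: list) -> list:
    """Keep only the records that survive parsing and validation."""
    return [trip for trip in map(parse_trip, raw_records) if trip is not None]
```

Modeling would then start from the cleaned list rather than from the raw records.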

At this point, a range of techniques and tools have already been developed to manipulate and visualize Big Data. Furthermore, expertise is drawn from various fields including statistics, computer science, mathematics, and economics. As we can see, there are more than a few challenges to face, and that’s why Big Data requires a multidisciplinary approach.

When it comes to transportation planning and urban mobility, spatial analytics are used to extract the topological, geographical, and geometric properties that are encoded inside a data set.

One of the most important factors when selecting a data source is the scope and quality of the data set. Ideally, data extracted from a single source would be clean and precise.

In reality, data from a single source is often messy and includes incorrect, mislabelled, missing, and even spurious values. And because different sources are heterogeneous in nature, data from one source is often incompatible with data from another.

Data Mining and Modeling

Although traditional methods that involve statistics and optimization are still relevant, they have certain limitations when faced with high-velocity data sets. Approaches such as data mining, network analysis, visualization techniques, and pattern recognition have shown much better results when it comes to Big Data.

Rather than assuming a model that describes relationships in the data or requiring specific queries on which to base analysis, data mining lets the data speak for itself. This means it relies on algorithms to discover patterns that are not evident in single or joined data sets.

Data mining algorithms perform different types of operations, including classification, clustering, regression, association, anomaly detection, and summarization. These approaches can be supervised, in which examples provided by human operators guide the process, or unsupervised, in which patterns are detected algorithmically.
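As one small example of an unsupervised operation, the sketch below flags anomalies in a list of trip durations using z-scores (how many standard deviations a value lies from the mean). This is only one of many possible anomaly detection techniques; the function name and the threshold of 2.0 are assumptions chosen for the example.

```python
from statistics import mean, pstdev


def find_anomalies(values: list, threshold: float = 2.0) -> list:
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [(i, v) for i, v in enumerate(values)
            if abs(v - mu) / sigma > threshold]


# Trip durations in minutes; the last one looks suspicious.
durations = [12, 14, 13, 15, 11, 14, 13, 95]
print(find_anomalies(durations))
```

No human labels the 95-minute trip as unusual; it stands out purely from the distribution of the data.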

Building and running models are crucial for testing hypotheses concerning the importance of different variables in real-world systems. Models can simulate real-life scenarios and, as a result, can characterize, understand, and visualize relationships that are difficult to understand in complex systems.

Using Big Data, the scale, scope, and accessibility of modeling exercises are increased drastically. Modeling exercises help ensure that the right questions are asked, and they remain essential for producing high-value outputs.

What Does the Future Hold?

Urban mobility is an ongoing problem for cities worldwide. Cities have the giant task of ensuring that travelers can get from point A to point B safely and affordably. Because of this, cities need to have a better understanding of their complex mobility systems. This is where Big Data and visualization come in, so let’s take a look at what the future holds for urban mobility systems.

Infrastructure

As we already mentioned, data analysis can simulate real-life scenarios. Using data, cities can now understand their ecosystem better and answer various crucial questions. This includes travel demand throughout the day, public transport system capacities, bottlenecks, etc.

Using heatmaps, cities can now illustrate their traffic volume, whether it’s neighborhoods, roads, or entire regions. These visualizations can now help traffic planners to optimize infrastructure more easily.
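The data behind such a heatmap can be as simple as a count of observations per map cell. The Python sketch below bins GPS points into a regular latitude/longitude grid; the function name and the 0.01-degree cell size are assumptions for illustration, and drawing the actual map would be left to a visualization tool.

```python
import math
from collections import Counter


def grid_counts(points: list, cell_size_deg: float = 0.01) -> Counter:
    """Count how many (lat, lon) points fall into each grid cell."""
    counts = Counter()
    for lat, lon in points:
        cell = (math.floor(lat / cell_size_deg),
                math.floor(lon / cell_size_deg))
        counts[cell] += 1
    return counts


# Three made-up vehicle positions; two of them share a cell.
points = [(53.461, 9.981), (53.462, 9.983), (53.551, 9.993)]
for cell, n in grid_counts(points).most_common():
    print(cell, n)
```

Cells with the highest counts are the "hot" areas a planner would look at first.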

Traffic congestion

According to INRIX and TomTom, traffic volume dropped in 2020 due to the global pandemic, and with fewer vehicles on the road, drivers saved money thanks to reduced congestion. However, traffic jams are still a huge problem in cities around the world. They produce large quantities of carbon dioxide and raise public health risks and medical treatment costs.

Traffic congestion can be reduced with the help of transportation data. Three modules make up the general framework of data-analytics-based traffic flow prediction: a data collection module, a data-analytics-based module, and an application module.

Data Collection Module

Transportation data can be collected from various vehicle-mounted devices including GPS, WiFi, Bluetooth, and RFID. For instance, shared bikes can be equipped with GPS, WiFi, and Bluetooth for tracking, so we can see when the bikes are used and which trajectories they follow. However, micro-mobility vehicles have one big disadvantage: their small size makes them less visible on the road, which can lead to crashes.

Data Analytics-Based Module

Transportation data can now be analyzed with methods such as deep learning, classification, ranking, and regression to predict traffic flow. Technologies such as time series models, deep-learning-based predictors, Markov chain models, and combinations of neural networks can be used for data-analytics-based prediction.
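Of the techniques listed, the Markov chain model is the easiest to sketch. The Python example below estimates transition probabilities between traffic states from an observed sequence and predicts the most likely next state. The state labels (`free`, `heavy`, `jam`) and the observation sequence are invented for illustration; a production predictor would use far richer data.

```python
from collections import Counter, defaultdict


def fit_transitions(states: list) -> dict:
    """Estimate P(next state | current state) from consecutive observations."""
    counts = defaultdict(Counter)
    for current, nxt in zip(states, states[1:]):
        counts[current][nxt] += 1
    return {
        state: {nxt: n / sum(c.values()) for nxt, n in c.items()}
        for state, c in counts.items()
    }


def predict_next(transitions: dict, current: str):
    """Return the most probable next state, or None for an unseen state."""
    options = transitions.get(current)
    if not options:
        return None
    return max(options, key=options.get)


# Made-up traffic states for one road segment, sampled at fixed intervals.
observed = ["free", "free", "free", "heavy", "jam", "jam", "heavy",
            "free", "free", "heavy", "jam", "jam", "jam", "heavy"]
model = fit_transitions(observed)
print(predict_next(model, "heavy"))
```

The key assumption of a first-order Markov chain is that the next state depends only on the current one, which is a simplification of real traffic.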

Application Module

Predicted results can be used to support many applications for improving the quality of life in cities. These include transportation planning, transportation management, and city management and planning.

Long-Term Traffic Planning

Cities have an ongoing problem with construction work. It creates constant disruptions in traffic and cities are forced to create changes in routing. Using data analytics, cities can now look at possible scenarios that can have an impact on traffic flow.

A good example of this method in action is the Harburg district of Hamburg. The city had many construction projects planned for 2021, which required full or partial road closures. The authorities used simulation software to plan detours. The software showed that the alternative roads would end up clogged, so they decided to begin the construction projects at different times.

Short-Term Traffic Planning

Cities can now use software to simulate incidents or events that usually affect the road network. This can help the authorities stabilize the traffic situation by suggesting alternate routes and sharing them on the news. Hamburg is once again a good example of putting this strategy to good use. In 2020, the environmental movement “Extinction Rebellion” blocked all access to Hamburg’s Köhlbrand bridge. However, Hamburg’s police had already simulated this kind of street closure and were able to react quickly with well-informed decisions.

Final Word

Big Data and predictive analytics are creating an impact on the mobility industry. However, we are also witnessing an exponential growth of global data, enhanced by emerging technologies such as automated vehicles, drones, automated aerial vehicles, robotic deliveries, etc. Once these technologies become the new standard, we will surely see the true power of Big Data and predictive analytics.

About the author

Leandro Nesi

Data Scientist at 2hire

Less is more. I look for science and numbers in all my interests and emotions with the people I surround myself with.

Posted on December 3, 2021 by Benedetta Biggi